How we got Trump right

My firm, J.L. Partners, had the most accurate election forecast of 2024. Here's how we did it

Over the last year I have spoken to a North Carolina fortune teller, scaled Nevada sand dunes with a swing voter, even sat for two hours with an Iowan nudist. I often questioned what I was doing.

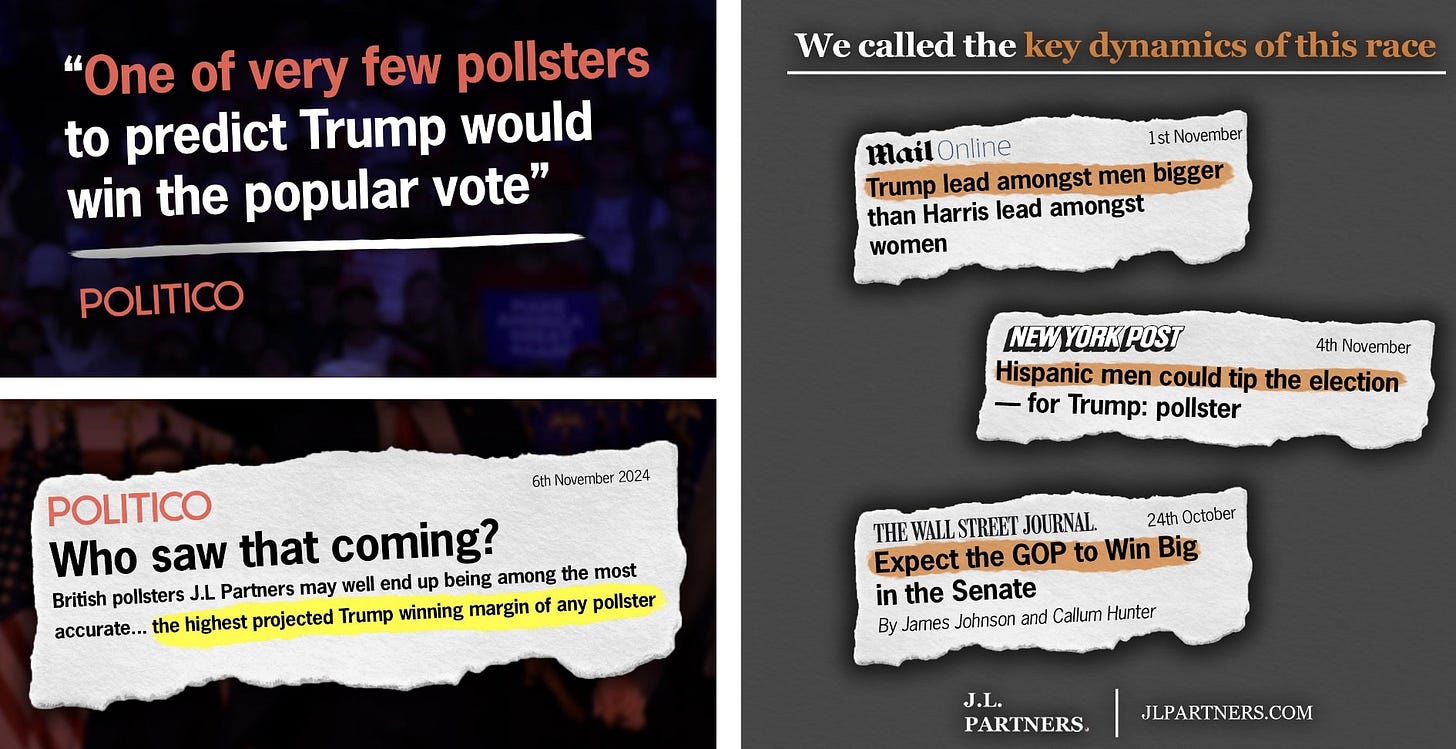

But it paid off. As verified by POLITICO, the firm I co-founded, J.L. Partners, was the most accurate pollster and modeling firm in the race. Its final model projected a 287-251 win, the largest projected margin for Trump of any model, and it was one of only two models to forecast a Trump victory.

We had been calling a Trump win since September 24th, and for 25 of the campaign's last 30 days our forecast was 312-226, which is where Trump ended up.

There were other successes for the firm:

Our final national poll also showed Trump winning the popular vote, with a 3-point lead. A Trump popular-vote win was seen as an outlier: the final national polling average had Harris ahead by 1.2 points.

J.L. Partners also had the most accurate poll of Ohio, the main state we polled because of our client base. The New York Times projects that Trump will win the state by 11 points and that Bernie Moreno will win the Senate race by 6 points. Our final poll had Trump leading by 9 points and Moreno leading by 6, while the polling average showed a win for Sherrod Brown. This is the second cycle in a row that J.L. Partners has been the most accurate pollster in Ohio: our 2022 poll called the Senate race to within 0.2 percentage points.

Finally, in the Wall Street Journal, we called that the Senate would be redder than anyone expected, because our model predicted how undecided voters would behave.

How did we do it? We believe there are four key reasons.

1. A mixed-method approach, essential in today’s information ecosystem.

Our view is that single-mode polls, whether online or live call, are prone to higher levels of bias and have missed that key under-represented Trump voter for three cycles now. This does not apply universally, but it tends to be the rule rather than the exception.

Phone polls are liable to over-sample those willing to give pollsters the time of day: our statistics show that person is more likely to be an older, white, liberal woman (read: Selzer’s sample). Online polls over-sample people who are educated, more engaged, younger, and more likely to be working from home. Those groups all lean more Democratic.

Instead, we blend methods using an in-house algorithm, depending on the audience we are trying to reach. We use live call to cell, live call to landline, SMS-to-web, online panel, and in-app game polling, and each method reaches a different type of voter. The last of these, for example, which rewards responses with in-game points as people play games on their phones, is more likely to pick up young, nonwhite men. That helped produce a nonwhite sample in which 30% backed Trump, in line with the exit polls we are seeing.

That is how we pick up the elusive Trump voter who is less likely to trust polls or to have the time to fill them out.

2. Our sample design for non-voters: following the data, not herding.

Throughout the cycle, our methods kept giving us a significant number of 2016 and 2020 non-voters. In our final poll, they made up 17% of the sample, and the group was predominantly rural and heavily Trump-voting.

Other pollsters, we know for a fact, scaled or weighted these numbers down. Surely they couldn’t be real, they assumed. But we did not herd or hedge, and went with the data even where it challenged our assumptions. We combined this with respondents' recalled 2020 vote, which acted as an anchor for the data, while still letting our quotas flex to match the reality of new developments. We were right to do so: a turnout surge helped Donald Trump among his most favorable demographics.
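To make the "anchor plus flex" idea concrete, here is a minimal Python sketch of one way it could work, assuming simple one-variable post-stratification rather than whatever J.L. Partners actually runs. The function name `recall_vote_weights`, the group labels, and the target shares are all hypothetical. Respondents who recall voting Biden or Trump in 2020 are weighted to assumed target proportions, while a "flex" group such as 2020 non-voters keeps its observed share instead of being scaled down.

```python
from collections import Counter


def recall_vote_weights(respondents, targets, flex_groups):
    """Weight respondents on recalled 2020 vote (hypothetical sketch).

    respondents: list of dicts with a "recall_2020" key.
    targets: assumed population shares for the anchored groups
             (e.g. Biden and Trump 2020 voters); rescaled below.
    flex_groups: groups (e.g. 2020 non-voters) that keep their
                 observed sample share instead of being weighted down.
    """
    n = len(respondents)
    counts = Counter(r["recall_2020"] for r in respondents)
    observed = {group: count / n for group, count in counts.items()}

    # Share of the sample left untouched because it sits in a flex group.
    flex_share = sum(observed.get(g, 0.0) for g in flex_groups)
    anchored_total = sum(targets.values())

    weights = {}
    for group, share in observed.items():
        if group in flex_groups:
            weights[group] = 1.0  # follow the data: no scaling down
        else:
            # Anchored groups split the remaining share in target proportions.
            target = targets[group] / anchored_total * (1 - flex_share)
            weights[group] = target / share
    return [weights[r["recall_2020"]] for r in respondents]


# Toy sample: 4 recalled Biden voters, 3 Trump voters, 3 non-voters.
sample = (
    [{"recall_2020": "Biden"}] * 4
    + [{"recall_2020": "Trump"}] * 3
    + [{"recall_2020": "Did not vote"}] * 3
)
w = recall_vote_weights(sample, {"Biden": 0.51, "Trump": 0.49}, {"Did not vote"})
print([round(x, 3) for x in w])
```

In this toy sample the non-voters keep their 30% share, and the weights still sum to the sample size, so the anchor reshapes only the recalled-voter part of the data.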

3. The decisions that went into our model, particularly giving Kamala Harris no incumbency bonus.

When Harris joined the race, we made a decision: we would not award her the typical incumbency bonus that almost every other model did. We received flak for this, but it was the right call. Including one would have swung national win probabilities by around five points in Harris’ favor. In a race that polling suggested would be very close, this assumption made a big difference.
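Why a small bonus matters so much in a close race can be shown with a toy calculation. This sketch assumes the final margin is normally distributed around the model's expected margin; the 0.5-point bonus and 4-point standard deviation are hypothetical inputs, not figures from our model. Win probability is the normal CDF of the expected margin, so a fixed shift moves it most when the race is near a tie.

```python
import math


def win_probability(expected_margin, sd):
    """P(final margin > 0), assuming the margin is Normal(expected_margin, sd)."""
    return 0.5 * (1.0 + math.erf(expected_margin / (sd * math.sqrt(2.0))))


def bonus_shift(expected_margin, bonus, sd):
    """Change in win probability from adding a fixed bonus to the expected margin."""
    return win_probability(expected_margin + bonus, sd) - win_probability(expected_margin, sd)


# Hypothetical inputs: a 0.5-point bonus and a 4-point standard deviation.
for margin in (0.0, 5.0):
    print(margin, round(bonus_shift(margin, 0.5, 4.0), 3))
```

In this toy setup the same half-point bonus moves win probability by roughly five points in a tied race, but only about two points when one side already leads by five.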

But this should not have surprised modelers: Harris was not the incumbent. In fact, just as incumbents around the world have been toppled one after another since 2020, at multiple points in the race our polling showed that her ties to the incumbent administration were hurting her.

We also made other decisions in our modeling. Early on, we decided to drastically upweight polls that asked full-ballot questions, including third parties. This is key. Voters face all of these choices when they go into the voting booth, not just the Harris v Trump head-to-head that a shocking number of state polls used right up to their final poll. Using our research, we were able to model vote flows in states where third-party candidates were on the ballot. Those third-party candidates cut Harris off at the knees, especially among Arab American voters in Michigan.

We also drastically down-weighted online-only panels and up-weighted our mixed-method polls. Because sample size matters in our modeling procedure, those polls would otherwise totally swamp the model. By limiting their contribution, we kept potential systematic bias out of our model.
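The effect of these poll-level weights on an average can be sketched in a few lines of Python. This is a minimal illustration, not our modeling procedure: the `Poll` fields, the multiplier values in `MODE_MULTIPLIER` and `FULL_BALLOT_MULTIPLIER`, and the two example polls are all hypothetical. Each poll's weight starts from its sample size and is then scaled down for online-only mode and up for a full-ballot question.

```python
from dataclasses import dataclass


@dataclass
class Poll:
    margin: float      # Trump minus Harris, in points
    sample_size: int
    mode: str          # "online_only" or "mixed" (hypothetical labels)
    full_ballot: bool  # asked with third-party candidates listed


# Hypothetical multipliers, for illustration only.
MODE_MULTIPLIER = {"online_only": 0.25, "mixed": 1.5}
FULL_BALLOT_MULTIPLIER = 2.0


def poll_weight(poll, adjust=True):
    """Start from sample size, then optionally apply mode and ballot multipliers."""
    weight = float(poll.sample_size)
    if adjust:
        weight *= MODE_MULTIPLIER.get(poll.mode, 1.0)
        if poll.full_ballot:
            weight *= FULL_BALLOT_MULTIPLIER
    return weight


def average_margin(polls, adjust=True):
    """Weighted average margin across polls."""
    weights = [poll_weight(p, adjust) for p in polls]
    return sum(w * p.margin for w, p in zip(weights, polls)) / sum(weights)


polls = [
    Poll(margin=-2.0, sample_size=3000, mode="online_only", full_ballot=False),
    Poll(margin=1.0, sample_size=800, mode="mixed", full_ballot=True),
]
print(round(average_margin(polls, adjust=False), 2))
print(round(average_margin(polls), 2))
```

With sample size alone, the large online-only poll dominates and the average leans Harris; once the multipliers are applied, the smaller mixed-method, full-ballot poll carries more weight and the average flips. The multipliers here are arbitrary; the point is only how quickly sample size swamps everything else when left unadjusted.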

Finally, and a key part of our Senate model, we re-allocated undecided voters using a predictive model that was highly accurate in the UK election earlier this year. Trained on demographics and respondents' other answers in the survey, it gave Republican Senate candidates bigger leads than public polls that simply reassigned undecideds by self-reported lean or removed them from the samples altogether.
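The general idea of reassigning undecideds with a model, rather than by self-reported lean, can be sketched in Python. This is a minimal illustration using plain logistic regression fitted by gradient descent; it is a stand-in, not the model described above. The two features (`is_rural`, `economy_worse`) and the toy respondents are hypothetical. The model is trained on decided voters, and each undecided respondent is then split fractionally by their predicted probability of voting Republican.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on log-loss; each row of X is a list of features."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


def reallocate_undecideds(decided, undecided):
    """decided: list of (features, 1 if Republican else 0).
    undecided: list of feature lists.
    Returns expected (Republican, Democratic) counts among undecideds."""
    X = [features for features, _ in decided]
    y = [label for _, label in decided]
    w, b = fit_logistic(X, y)
    p_rep = [sigmoid(sum(wj * xj for wj, xj in zip(w, f)) + b) for f in undecided]
    rep = sum(p_rep)
    return rep, len(undecided) - rep


# Hypothetical features: [is_rural, economy_worse]
decided = (
    [([1, 1], 1)] * 4 + [([1, 0], 1)] * 2 + [([1, 0], 0)]
    + [([0, 0], 0)] * 4 + [([0, 1], 0)] * 2 + [([0, 1], 1)]
)
rep, dem = reallocate_undecideds(decided, [[1, 1], [1, 1], [1, 0]])
print(round(rep, 2), round(dem, 2))
```

Unlike dropping undecideds, this keeps every respondent in the count; unlike self-reported lean, it uses what similar decided voters actually chose.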

4. Long, structured interviews with voters face-to-face.

We have always felt that qualitative research is essential alongside all the modeling and polling. Polling is a science, but it also requires big judgment calls on weighting and more, such as those above. These were not assumptions: they were based on hundreds of 90-minute face-to-face interviews with voters over the last two years.

They helped confirm to us that an incumbency bonus in the model would be foolish, that we should not downweight our non-voters, and that there was no late split to Harris among undecideds. To the financial people receiving this email, this might seem anecdotal, or like a gut reaction. But these were data-led decisions, simply grounded in qualitative interviews rather than quantitative analysis.

The interviews were also key to understanding nonwhite voters switching to Trump, whose voting intention a ten-minute conversation would not capture. They would start the interview leaning Harris but end it leaning Trump, a better indication of where they would end up once their world view on the economy, the border, and family values became clear.

There is always room to improve, and no poll can ever be perfect. But those are the ingredients that made J.L. Partners the most accurate pollster and modeling firm in the U.S., adding to its 2024 success as the third-most accurate pollster in the UK General Election.

The election is over, but this Substack lives on as I continue to run interviews, speak to voters, and conduct polling not just on the Trump administration but on what the Next America after it looks like, too.